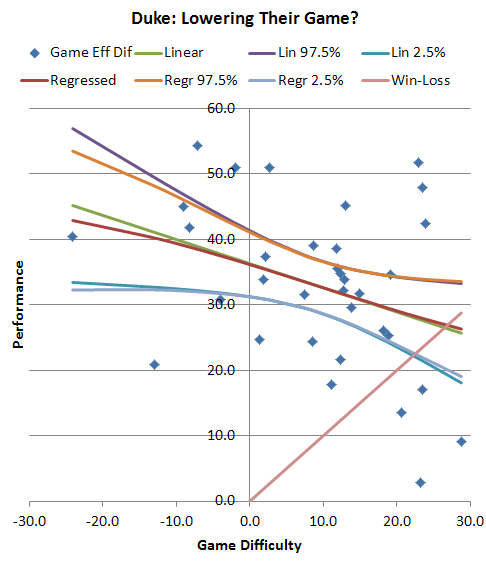

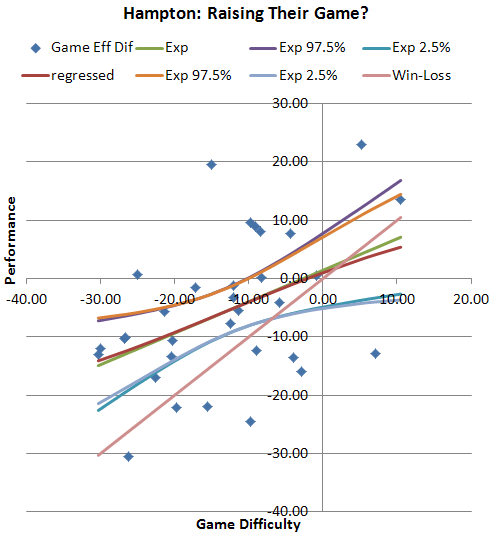

When we get to the NCAA tournament, it seems inevitable that some teams will raise their game, match up well with the “better” teams, and suddenly emerge as top teams themselves. Some teams play well when their opponent is better and take their foot off the gas when playing East Popcorn St. Efficiency-based metrics tend to penalize those teams: the ones that mostly played bad teams and didn’t really care until they played the big boys.

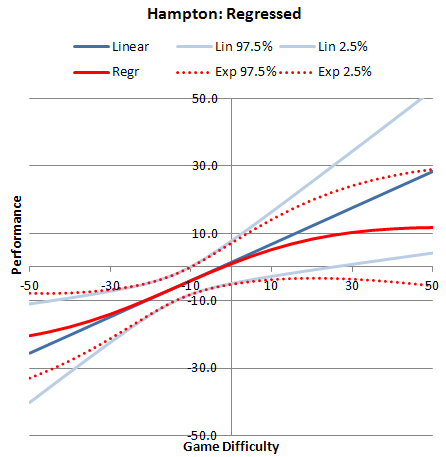

So I’ll start attacking this by running a linear regression of the fully adjusted performance the team put in against game difficulty (opponent strength + location). We’ll get a slope and an intercept… but we need more than that; we need to know how significant the results are.
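Here is a minimal sketch of that first step in plain Python. The function name `fit_line`, the variable names, and the six game values are all made up for illustration; the real inputs would be each game's difficulty and the team's adjusted performance in it. Besides the slope and intercept, it returns the residual standard deviation and the standard error of the slope, which is the first hint of how much to trust the trend.

```python
from math import sqrt

def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    # Residual standard deviation uses n - 2 degrees of freedom
    sse = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    resid_sd = sqrt(sse / (n - 2))
    slope_se = resid_sd / sqrt(sxx)
    return {"slope": slope, "intercept": intercept,
            "resid_sd": resid_sd, "slope_se": slope_se}

# Hypothetical data: game difficulty vs. adjusted performance
difficulty = [-10.0, -5.0, 0.0, 5.0, 10.0, 15.0]
performance = [2.0, 4.0, 3.0, 7.0, 9.0, 8.0]

fit = fit_line(difficulty, performance)
print(fit)
```

A positive slope here would describe a team that plays better as the games get harder; the slope divided by `slope_se` gives a rough t-statistic for whether that trend is distinguishable from noise.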

This is where (gasp) the Working-Hotelling Method comes in.

This method puts a confidence band around the entire regression line, which lets us estimate the uncertainty of the result at any point along it. If we’re way out past where the team has played any games, we really have very little idea how they’ll do. If, on the other hand, they’ve played a lot of games against similar-quality teams, we know fairly well how good they are against such teams.
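To make that concrete, here is a rough sketch of the Working-Hotelling band in plain Python. At a difficulty level `x0`, the half-width of the band is `W * s * sqrt(1/n + (x0 - x_bar)^2 / Sxx)`, where `W = sqrt(2 * F)` and `F` is the upper quantile of an F distribution with 2 and n - 2 degrees of freedom. Because the numerator degrees of freedom equal 2, that quantile has a closed form, so no stats library is needed. The function names, the 90% level (`alpha=0.10`), and the data are assumptions for illustration only.

```python
from math import sqrt

def wh_multiplier(df, alpha=0.10):
    """Working-Hotelling multiplier W = sqrt(2 * F(1 - alpha; 2, df)).

    For numerator df = 2 the F quantile is (df / 2) * (alpha**(-2/df) - 1).
    """
    return sqrt(df * (alpha ** (-2.0 / df) - 1.0))

def wh_band(x, y, x0, alpha=0.10):
    """Return (fitted value, standard error, band half-width) at x0."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    sse = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    s2 = sse / (n - 2)
    # Standard error of the fitted line grows with distance from x_bar
    se_fit = sqrt(s2 * (1.0 / n + (x0 - x_bar) ** 2 / sxx))
    fitted = intercept + slope * x0
    return fitted, se_fit, wh_multiplier(n - 2, alpha) * se_fit

# Hypothetical data: game difficulty vs. adjusted performance
difficulty = [-10.0, -5.0, 0.0, 5.0, 10.0, 15.0]
performance = [2.0, 4.0, 3.0, 7.0, 9.0, 8.0]

near = wh_band(difficulty, performance, x0=2.5)   # typical schedule strength
far = wh_band(difficulty, performance, x0=30.0)   # far tougher than any game played
print(near, far)
```

The band is narrowest at the team's average game difficulty and fans out as `x0` moves away from it, which is exactly the "we have very little idea" behavior for opponents unlike anyone the team has faced.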

And, using a little Bayesian magic, we can regress the linear curve derived above to adjust for the error.
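One simple way to picture this kind of adjustment, and not necessarily the exact calculation used here, is precision-weighted shrinkage: treat the regression's prediction at a given difficulty as a noisy estimate, and pull it toward a prior (say, the team's overall rating) in proportion to how uncertain it is. The sketch below assumes normal distributions for both; `shrink`, `prior_mean`, and `prior_sd` are hypothetical names, and the numbers are invented.

```python
from math import sqrt

def shrink(estimate, se, prior_mean, prior_sd):
    """Normal-normal posterior: weight each source by its precision (1 / variance).

    Assumes se > 0 and prior_sd > 0.
    """
    w_data = 1.0 / se ** 2
    w_prior = 1.0 / prior_sd ** 2
    post_mean = (estimate * w_data + prior_mean * w_prior) / (w_data + w_prior)
    post_sd = sqrt(1.0 / (w_data + w_prior))
    return post_mean, post_sd

# Hypothetical: the line predicts +10 against elite opponents, but with a
# large standard error because the team barely played anyone that good.
uncertain = shrink(estimate=10.0, se=6.0, prior_mean=4.0, prior_sd=3.0)

# Same prediction, but backed by many comparable games (small standard error).
confident = shrink(estimate=10.0, se=1.0, prior_mean=4.0, prior_sd=3.0)
print(uncertain, confident)
```

With a large standard error the prediction gets dragged most of the way back to the prior; with a small one it barely moves. That is the sense in which the line is regressed to adjust for the error.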

Examples:

More on this another time.